The Ethics of AI In Life Sciences: How Leaders Must Navigate Trust and Transparency

By Morten Nielsen, Robert Nickey, and Aoife Kearins

With incredible speed, artificial intelligence (AI) has become part of our day-to-day lives, reaching a pinnacle in 2023 when at least five reputable English-language dictionaries and publications crowned AI or a related term as their “word of the year”[1]. AI offers benefits to people everywhere and has the potential to transform communities, businesses, institutions, and society as a whole. However, alongside the numerous advantages of AI, ethical concerns must be given due consideration if these tools are to be implemented in a safe, transparent, and trustworthy way. It is telling that three of the five chosen “words of the year” revolve around concerns related to AI: Cambridge Dictionary’s and Dictionary.com’s “hallucinate” refers to the production of false information contrary to a user’s intent, presented as true and factual, while Merriam-Webster’s “authentic” underscores the importance of distinguishing genuine content from deepfakes or generated material.

Few industries stand to benefit more from AI than life sciences, which places a responsibility on leaders to consider and implement best practices for this technology. This article explores how AI is being deployed across the value chain in life sciences and the various ethical considerations this deployment raises. Most importantly, it offers recommendations for industry leaders who want to pave the way for ethical and sustainable use of AI in their operations and business practices.

Use of Artificial Intelligence in Life Sciences

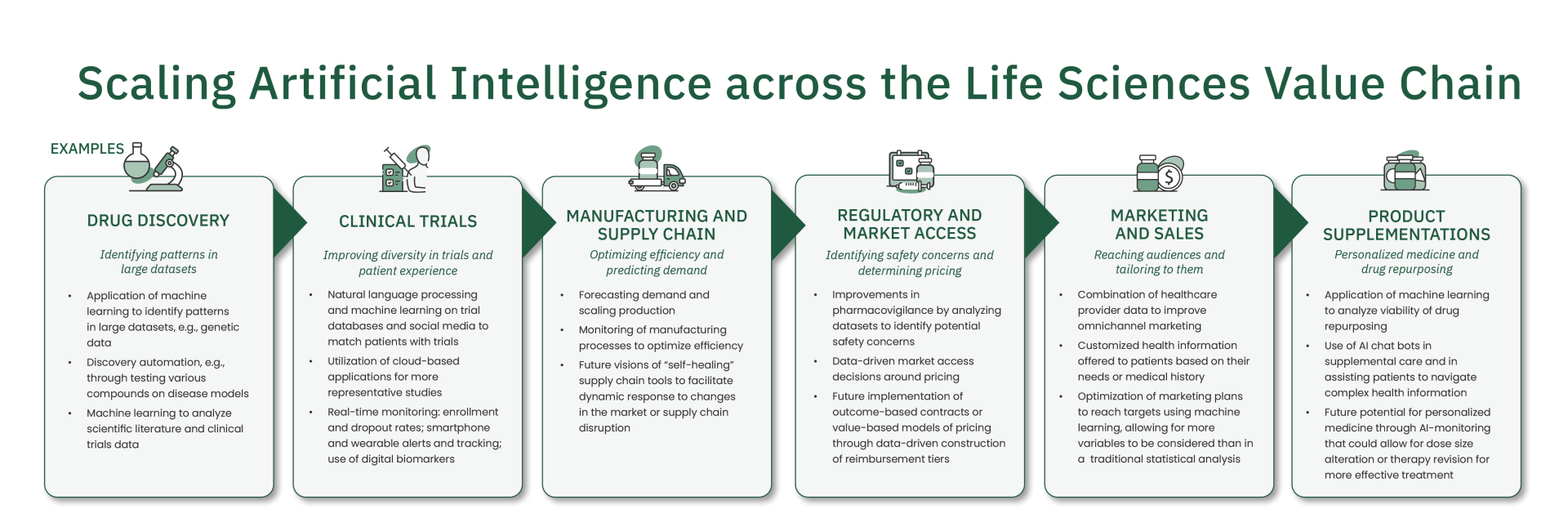

AI is making its way into every part of the life sciences value chain, increasing efficiency and, in particular, expanding data capabilities throughout (see chart above). A recent McKinsey report predicts that generative AI alone could increase revenue in the pharmaceuticals and medical products industry by 2.6 to 4.5%, making it the industry with the third-highest potential for AI-driven growth, after high tech and banking. R&D, software engineering, and marketing and sales were identified as the top three areas in which generative AI could impact life sciences firms[2].

At the R&D stage, machine learning appears to have the most potential to deliver significant value, with the capacity to sift through huge reams of data to spot patterns or make predictions. This can be applied at the research idea generation stage — such as applying machine learning algorithms to identify the most commonly cited unsolved or not fully addressed problems in the scientific literature, or gaps in past clinical trial data — or as a component of the research itself, such as in pattern recognition within genetic data or to complete theoretical testing on disease models.

At the clinical trials stage, natural language processing and wearable/smartphone intelligent monitoring become more relevant tools, allowing for easier trial participant recruitment, potential remote trials, and real-time monitoring of biomarkers or trial participation. At the supply chain stage, AI algorithmic functionality again comes to the fore, with the ability to forecast demand, scale production, and maximize efficiency.

At the regulatory and market access stage, AI capabilities can enhance pharmacovigilance by identifying potential safety concerns and can improve the tools used to determine pricing. In marketing and sales, AI can help companies reach a wider and more precisely targeted audience than before through data-driven market segmentation and personalized targeting, and can facilitate the integration of customized health information from patients into marketing and advisory materials. Finally, AI can be used at the product supplementation stage for a wide range of complementary functions: improving omnichannel marketing for the provider, applying machine learning to analyze the viability of drug repurposing, and paving the way for future developments in personalized medicine.

Ethical Considerations of Artificial Intelligence

There are a number of ethical concerns that immediately arise from the AI use cases outlined across the value chain. Five primary AI ethics concerns are of particular importance to the life sciences industry: disinformation, privacy, ownership, bias, and environmental concerns.

DISINFORMATION

Disinformation has perhaps been most clearly evidenced over the past year with the rise of generative AI. In essence, because it models relationships between words based on patterns in large volumes of training data rather than on the words’ meaning, generative AI can create content that seems coherent and accurate but may in fact be unrepresentative, contradictory, or simply incorrect.

PRIVACY

Privacy concerns about data use, storage, and sharing extend far beyond AI, but the very large quantities of data required to train AI models make this a particularly pertinent issue. In addition, many AI models continue to train on the queries (and underlying data) posed against them, creating additional privacy risks. Extensive use and repurposing of patient data offers great potential for research, but also raises urgent questions about privacy, data ownership, and safeguarding.

OWNERSHIP

The use of AI raises significant concerns about ownership and copyright, such as the use of copyrighted material in training datasets, the status of AI-generated text or graphics, and even whether AI tools should be “credited” in patent applications.

BIAS

Bias is almost ubiquitous in the AI ethics field, with high-profile cases in the media about voice and facial recognition software that exhibited racial biases, as well as a word-embedding model that responded “homemaker” when asked, “Man is to woman as computer programmer is to?”[3]. Bias is a particularly pervasive concern because of the concept of “bias in, bias out”: most of the time, the program is not learning bias from a prejudiced programmer, but from consuming a large amount of data from a highly biased world. This issue can easily rear its head in life sciences applications, where data from a non-representative group of clinical trial participants (skewed, for instance, by gender or race) can end up being used to train models or assess the viability of drug repurposing, producing results that hold only for a certain group.
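One simple guard against “bias in, bias out” is to compare the make-up of a trial cohort with a reference population before the data is reused. The sketch below is illustrative only: the function name `representation_gaps`, the 10-percentage-point tolerance, the cohort, and the reference shares are all invented for this example and are not drawn from any real trial or standard.

```python
from collections import Counter


def representation_gaps(participants, reference_shares, tolerance=0.10):
    """Flag groups whose share of the cohort differs from a reference share.

    participants: list of group labels, one per trial participant.
    reference_shares: dict mapping group label -> expected share (0 to 1).
    tolerance: hypothetical threshold; larger absolute gaps are flagged.
    Returns a dict of group -> (observed share - expected share).
    """
    if not participants:
        raise ValueError("participants must not be empty")
    counts = Counter(participants)
    total = len(participants)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        gap = observed - expected
        if abs(gap) > tolerance:
            gaps[group] = round(gap, 3)
    return gaps


# Invented cohort: 80 male and 20 female participants.
cohort = ["male"] * 80 + ["female"] * 20
# Assumed reference population, split evenly for illustration only.
reference = {"male": 0.5, "female": 0.5}

flagged = representation_gaps(cohort, reference)
print(flagged)
```

A check like this does not remove bias on its own, but it gives human reviewers a concrete signal that a dataset may not generalize beyond the group it over-represents.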

ENVIRONMENTAL CONCERNS

Finally, the use of AI presents an array of environmental issues, as evidenced by extensive research. For example, research from MIT shows that the world’s computing and information storage sector has a larger carbon footprint than the airline industry[4]. Similarly, according to results published by the University of Massachusetts, training a single AI model can produce about 626,000 pounds of carbon dioxide, the equivalent of around 300 round-trip flights between New York and San Francisco and nearly 5 times the lifetime emissions of the average car[5].
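The comparisons in the training-emissions figure can be checked with simple arithmetic. In the sketch below, only the 626,000-pound figure comes from the text. The per-car and per-flight values are assumptions, taken from the comparison table commonly cited alongside the University of Massachusetts study, and should be verified against the original before reuse.

```python
# All figures are in pounds of CO2-equivalent.
TRAINING_LBS = 626_000          # rounded figure quoted in the text
CAR_LIFETIME_LBS = 126_000      # assumed: average car, including fuel
ROUND_TRIP_FLIGHT_LBS = 1_984   # assumed: one passenger, New York <-> San Francisco

car_lifetimes = TRAINING_LBS / CAR_LIFETIME_LBS
round_trips = TRAINING_LBS / ROUND_TRIP_FLIGHT_LBS

print(f"{car_lifetimes:.2f} car lifetimes")
print(f"{round_trips:.0f} round-trip flights")
```

Under these assumed inputs, the ratios are consistent with the “nearly 5 times” and “around 300” comparisons quoted above.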

Considering these ethical issues is of utmost importance. In the past, limitations of technology led to “AI winters” in the early 1970s and late 1980s, when expectations raised by “hype cycles” among funders far exceeded the capabilities and results of then-available technology. With the rapid development of increasingly advanced AI technology[6] in recent years, getting the human and ethical considerations of AI wrong could be the greatest barrier to large-scale AI development and implementation.

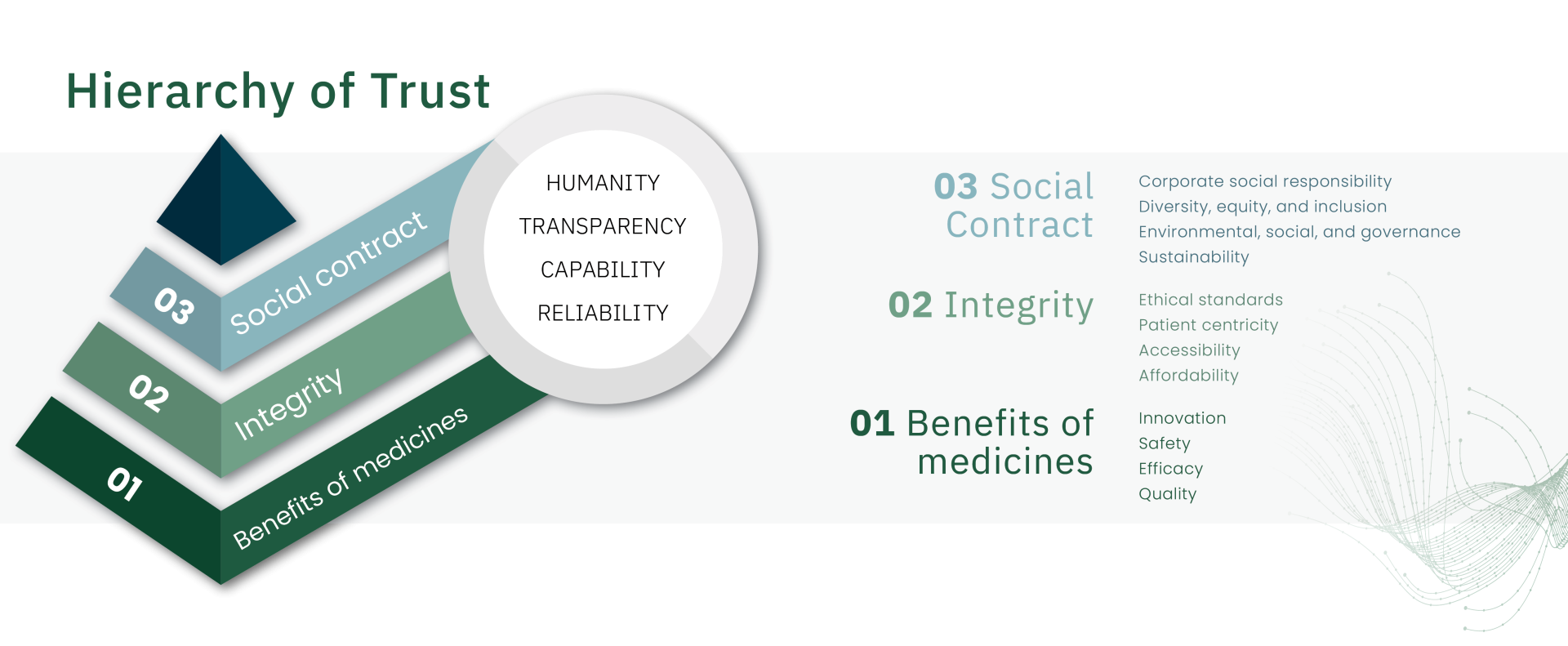

In “Trust: A Value Imperative to Pharma,”[7] WittKieffer authors outlined the hierarchy of trust: a framework for pharmaceutical and life sciences companies designed to demystify trust, a concept that is difficult to define and measure (see graphic). The hierarchy of trust is composed of three building blocks and, as in Maslow’s hierarchy of needs, each base element must be met before ascending to the next. The second tier of this hierarchy, “integrity,” includes ethical standards, and it is under this heading that we place ethical AI. Developing ethical and transparent AI practices is a fundamental part of how life sciences companies must operationalize trust. Leaders in the industry must prioritize integrity and ethical AI, or non-transparent practices could significantly damage or weaken patient trust.

Responsible Adoption of Trustworthy Artificial Intelligence

As demonstrated above, AI has tremendous potential to bring increased value and efficiency to the life sciences industry. At the same time, it is imperative for leaders to acknowledge that its implementation and application raise valid ethical concerns. In this context, more than ever, life sciences executives need strategic and long-term visionary competencies to effectively navigate the foreseen and unforeseen consequences that AI may present for years or even decades to come.

Guiding principles for leaders:

ACT NOW

Because AI is constantly evolving and improving, it is difficult for regulation to keep up, and there is relatively little guidance and few compliance structures in place for organizations implementing AI tools. However, this does not mean that leaders should wait until they are required to implement checks and balances. Starting now will allow leaders to show patients and the broader community that this is a priority issue and will position the company well for future regulatory structures.

PRIORITIZE TRANSPARENCY

Building transparency into every process where AI is used is essential to gaining and maintaining patient trust. Leaders should ensure that they are transparent about their AI use cases and the specifics of each: for instance, how and why machine learning algorithms are used, and on what datasets. Clinical trial participants should be informed if their data will be repurposed for different uses in the future. Data provenance (the detailed documentation of the origin, ownership, processing, editing, and transformation of data through its lifecycle) should be implemented consistently and presented alongside implementation or results. Transparency protocols should be reviewed and updated regularly to reflect the fast-moving pace of AI development.
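To make the idea of data provenance more concrete, the sketch below shows one possible shape for a provenance record. It is a minimal illustration, not a standard or a recommended schema: the class names `ProvenanceRecord` and `ProvenanceEvent`, their fields, and the sample values are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ProvenanceEvent:
    """One processing, editing, or transformation step applied to a dataset."""
    action: str
    actor: str
    timestamp: str


@dataclass
class ProvenanceRecord:
    """Hypothetical record covering origin, ownership, and history."""
    dataset_id: str
    origin: str
    owner: str
    consented_uses: list
    events: list = field(default_factory=list)

    def log(self, action, actor):
        """Append a timestamped event so the dataset's history stays auditable."""
        stamp = datetime.now(timezone.utc).isoformat()
        self.events.append(ProvenanceEvent(action, actor, stamp))

    def permits(self, use):
        """Return True only if participants were informed of this use."""
        return use in self.consented_uses


# Invented sample values for illustration only.
record = ProvenanceRecord(
    dataset_id="trial-001",
    origin="Phase II trial, site A",
    owner="Clinical Operations",
    consented_uses=["primary-analysis"],
)
record.log("de-identified patient records", "data-engineering")
record.log("trained risk model v1", "ml-team")

print(record.permits("primary-analysis"))
print(record.permits("drug-repurposing"))
print([event.action for event in record.events])
```

Keeping consented uses next to the processing history means a proposed reuse, such as drug repurposing, can be checked against what participants were actually told before any model is trained on their data.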

VIEW AI AS A COMPLEMENTARY TOOL — NOT A REPLACEMENT

AI has fantastic capabilities to optimize efficiency and handle more data, but it cannot replace human judgment. Human oversight can mitigate some of the potential ill effects of AI, such as data-learned bias or false results.

ADOPT A LEARNING MINDSET

AI technology, and the human and ethical considerations that come along with it, is changing and developing every day. A willingness to keep learning, backed by a real commitment to doing so, will allow leaders to adapt and develop as the technology does.

ENSURE ACCOUNTABILITY STRUCTURES ARE IN PLACE

Not everything can be controlled, and with technology that is so new and dynamic, errors and mistakes will be made. This is why it is important to implement clear accountability structures, with checks and balances at each stage, to ensure that any errors or issues are caught as early as possible and that well-defined processes exist for their resolution.

PROMOTE ORGANIZATION-WIDE ALIGNMENT

As shown in the earlier diagram, AI is already present throughout the life sciences value chain. Therefore, it is important that leaders throughout the organization — from R&D to supply chain logistics to legal and regulatory — are aligned on company use cases and approaches to ethical AI.

ENSURE AI POLICIES ARE CONSISTENT WITH OTHER COMPANY POLICIES

As life sciences companies place a greater focus on ESG (environmental, social, and governance) — another component of their trust-building process — they must examine the carbon footprint of their AI and data operations.

PROVIDE OVERSIGHT AND GOVERNANCE

The CEO and the Board should set the boundaries for ethical AI in an organization. They are responsible for ensuring oversight and governance in the use of AI, providing strategic direction, setting their organization’s specific definition and approaches for ethical AI, and defining ethical guidelines. Furthermore, they should demonstrate leadership and act as role models in establishing a culture prioritizing ethics and responsible practices, alongside engaging with internal and external stakeholders to promote ethical AI adoption that is aligned with the company’s values.

These guiding principles should be a starting point for executives in life sciences who aim to position themselves and their firms as pioneers in ethical AI adoption, a practice that will undoubtedly become an integral part of companies’ trust-building activities. By laying the groundwork now and embracing policies of transparency and a people-first approach, companies will be well-prepared to demonstrate their commitment to prioritizing public trust.

About the Authors

Morten Nielsen, NACD.CD, is a Senior Partner in WittKieffer’s Life Sciences Practice, Robert Nickey is a Senior Associate in WittKieffer’s Interim Leadership (Life Sciences & Investor-Backed Healthcare) Practice, and Aoife Kearins is an Insights Strategist for WittKieffer’s Commercial Strategy & Insights team.

REFERENCES

1. Cambridge Dictionary, The Cambridge Dictionary Word of the Year 2023; Collins Dictionary, Collins — The Collins Word of the Year 2023 is… (collinsdictionary.com); Dictionary.com, Dictionary.com’s 2023 Word Of The Year Is… | Dictionary.com; The Economist, Our word of the year for 2023 (economist.com); Merriam-Webster Dictionary, Word of the Year 2023 | Authentic | Merriam-Webster

2. McKinsey, 2023. “What’s the future of generative AI? An early view in 15 charts.”

3. Bolukbasi, Tolga, Chang, Kai-Wen, Zou, James, Saligrama, Venkatesh, and Kalai, Adam, 2016. “Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings.” Retrieved from https://arxiv.org/abs/1607.06520

4. Gonzales Monserrate, Steven, 2021. “The Cloud is Material: On the Environmental Impacts of Computation and Data Storage.” MIT Case Studies in Social and Ethical Responsibilities of Computing Series

5. Hao, Karen, 2019. “Training a single AI model can emit as much carbon as five cars in their lifetimes.” MIT Technology Review

6. Russell, Stuart and Norvig, Peter, 2021. “Artificial Intelligence: a Modern Approach.” Pearson, 4th edition

7. Foster, Lynn, Nielsen, Morten, and Serikova, Saule, 2023. “Measuring Pharma’s Trust Performance.” Pharmaceutical Executive magazine